cui_spring2021

overview

- check in

- presentations: Topics on Accessibility

- Facial Recognition as UI

- Gesture Recognition as UI

- Coding examples with Machine Learning and Teachable Machine

- Final Project requirements

Accessibility presentations

How the blind use technology to see the world

Austin Seraphin - video

Austin’s website.

Voice User Interfaces - Austin served on the accessibility committee for these interactive fiction games, which has posted a report on its research.

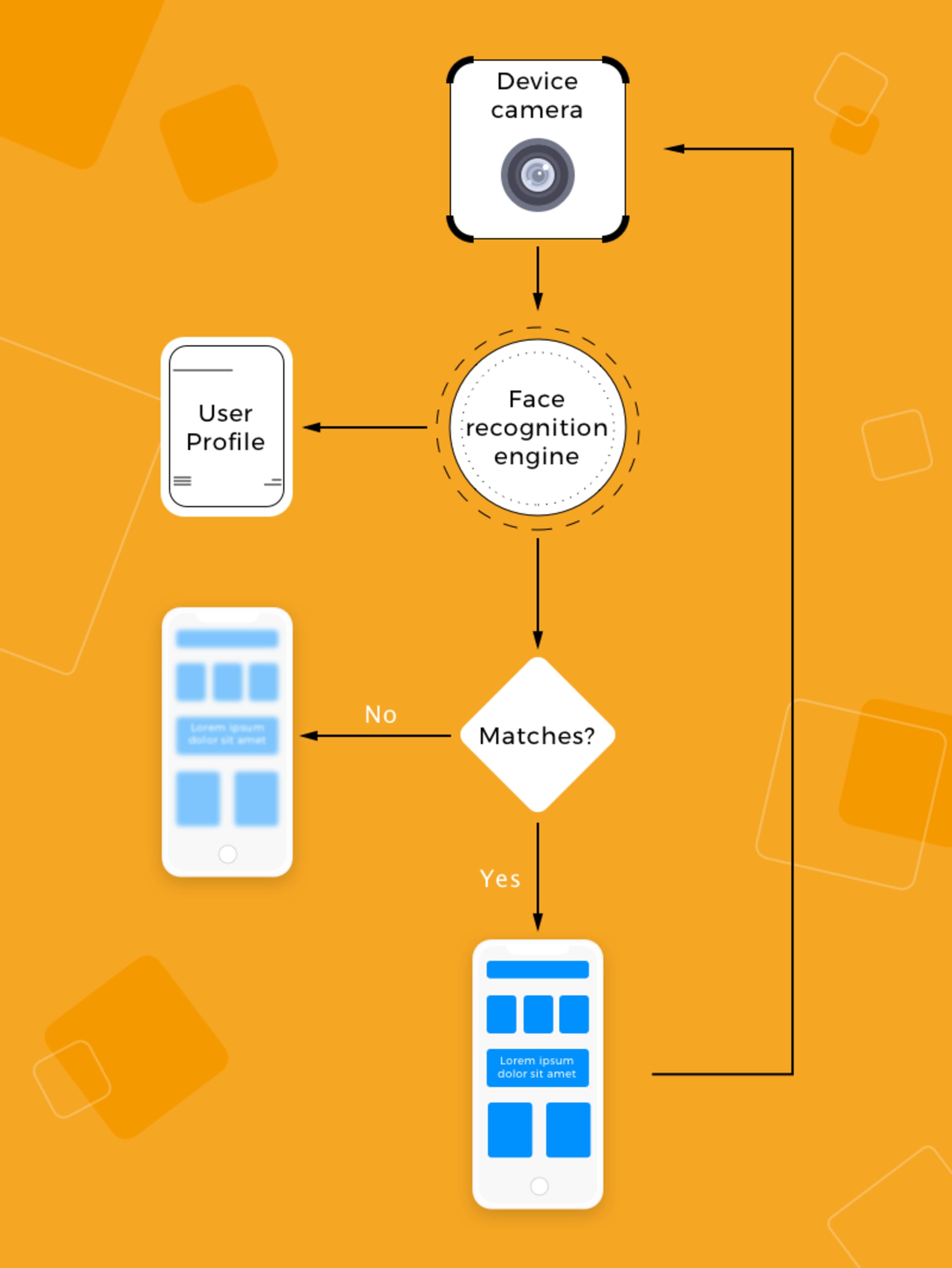

Facial Recognition

How does it work? Five step process:

- Facial detection / tracking

- Facial alignment

- Feature extraction

- Feature matching

- Facial recognition
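To make the last steps more concrete, here is a minimal sketch of feature matching, assuming the feature extraction step has already turned each face into a list of numbers (an "embedding"). The function names, the tiny three-number embeddings, and the 0.25 threshold are all made up for illustration; real systems use much longer vectors and tuned thresholds.

```javascript
// Hypothetical sketch of feature matching: find the enrolled face whose
// embedding is closest to the probe face, and accept it only if it is close enough.
// All embeddings, names, and the threshold below are illustration values.

function euclideanDistance(a, b) {
  if (a.length !== b.length) {
    throw new Error("Embeddings must have the same length");
  }
  let sum = 0;
  for (let i = 0; i < a.length; i++) {
    const d = a[i] - b[i];
    sum += d * d;
  }
  return Math.sqrt(sum);
}

function matchFace(probe, gallery, threshold) {
  let best = null;
  for (const entry of gallery) {
    const distance = euclideanDistance(probe, entry.embedding);
    if (best === null || distance < best.distance) {
      best = { name: entry.name, distance };
    }
  }
  // Recognition: only report a match when the nearest face is within the threshold.
  if (best !== null && best.distance <= threshold) {
    return best;
  }
  return null;
}

const gallery = [
  { name: "person_a", embedding: [0.1, 0.9, 0.3] },
  { name: "person_b", embedding: [0.8, 0.2, 0.5] },
];

const result = matchFace([0.12, 0.88, 0.31], gallery, 0.25);
console.log(result ? result.name : "unknown");
```

The threshold is the design choice to watch: set it too loose and strangers get matched to enrolled people, set it too tight and enrolled people are rejected.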

Facial recognition in application interfaces

New facial recognition and machine learning interfaces

Project Euphonia - Helping everyone be understood - video - link to project

Facial Recognition - ethical concerns

What Facial Recognition Steals From Us - video

Human faces evolved to be highly distinctive; it’s helpful to be able to recognize individual members of one’s social group and quickly identify strangers, and that hasn’t changed for hundreds of thousands of years. Then in just the past five years, the meaning of the human face has quietly but seismically shifted. That’s because researchers at Facebook, Google, and other institutions have nearly perfected techniques for automated facial recognition. The result of that research is that your face isn’t just a unique part of your body anymore, it’s biometric data that can be copied an infinite number of times and stored forever. In this video, we explain how facial recognition technology works, where it came from, and what’s at stake. –ReCode, Vox Media

Reading on ethical issues of facial recognition

image modified by ACLU, original by colorblindPicaso on Flickr

- Obscurity and Privacy by Evan Selinger and Woodrow Hartzog, from Routledge Companion to Philosophy of Technology (Joseph Pitt & Ashley Shew, eds., 2014 Forthcoming)

- Face Recognition and Privacy in the Age of Augmented Reality by Alessandro Acquisti, Ralph Gross, and Frederic Stutzman in the Journal of Privacy and Confidentiality, 6(2), 1, 2014

- FBI and ICE Find State Driver’s License Photos Are a Gold Mine for Facial Recognition Searches in Technology, The Washington Post, September 7, 2019

- Are Stores You Shop at Secretly Using Face Recognition on You? by Jenna Bitar and Jay Stanley, ACLU Blog, March 26, 2018

- The Secretive Company That Might End Privacy as We Know It by Kashmir Hill, The New York Times, January 18, 2020

Examples of Facial Recognition UI

- How Facial Recognition works at CaixaBank ATMs - video

Coding examples with Machine Learning and Teachable Machine

Training an Image Classification Model

- Collect Data

- Have 2 kinds of images of something

- Label those images - (these are called “classes”)

- How many? Experiment. Maybe 25 to 50 images per class.

- Click Train Model, and do not switch tabs while it trains: the training runs live in the browser!

- The image classifier uses MobileNet, a pretrained convolutional neural network, and applies transfer learning to your images

- When finished, test it. You can add more classes if you like (such as additional poses, or additional images).

- Happy with it? Save it by clicking Export Model. Choose TensorFlow.js and choose to upload to the cloud. (In the future, if you don’t want to upload your model to Google’s server, you can save it locally instead.) You will get a URL and a permanent webpage where you can use, test, debug, or change your model.
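When you test the model, each prediction comes back as a list of class labels with a probability for each one. Here is a minimal sketch of picking the most likely class from such a list. The `{ className, probability }` shape follows the Teachable Machine image export; the class names and numbers are made-up sample data, and `topPrediction` is a hypothetical helper, not part of the library.

```javascript
// Hypothetical helper: return the prediction with the highest probability,
// or null when the list is empty.
function topPrediction(predictions) {
  if (predictions.length === 0) {
    return null;
  }
  return predictions.reduce((best, p) =>
    p.probability > best.probability ? p : best
  );
}

// Made-up sample data in the shape the exported model reports.
const samplePredictions = [
  { className: "thumbs_up", probability: 0.91 },
  { className: "thumbs_down", probability: 0.06 },
  { className: "nothing", probability: 0.03 },
];

const top = topPrediction(samplePredictions);
console.log(`${top.className}: ${top.probability.toFixed(2)}`);
```

If the top probability is often low or jumps between classes while you test, that is usually a sign to add more images or a "nothing" class.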

Example starter code

- Change your model url!

- Edit your triggers.js file!
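The two edits above might look roughly like this. This is only a sketch under assumptions: the contents of the real starter code and triggers.js are not shown here, so `modelFiles`, `triggers`, `runTrigger`, the class names, and the 0.8 threshold are all hypothetical. The `model.json` and `metadata.json` file names are the ones a Teachable Machine TensorFlow.js export provides, and `<your-model-id>` is a placeholder for the ID in your own export URL.

```javascript
// Placeholder: paste the URL you received from Export Model here.
const MODEL_BASE_URL =
  "https://teachablemachine.withgoogle.com/models/<your-model-id>/";

// Build the two file URLs the exported model is loaded from.
function modelFiles(baseUrl) {
  const base = baseUrl.endsWith("/") ? baseUrl : baseUrl + "/";
  return {
    modelURL: base + "model.json",
    metadataURL: base + "metadata.json",
  };
}

// Hypothetical trigger table: class label -> what the interface should do.
const triggers = new Map([
  ["thumbs_up", () => "play"],
  ["thumbs_down", () => "pause"],
]);

// Run the action for a class only when the model is confident enough.
// Returns the action's result, or null when nothing should happen.
function runTrigger(className, probability, threshold = 0.8) {
  const action = triggers.get(className);
  if (action === undefined || probability < threshold) {
    return null;
  }
  return action();
}

console.log(modelFiles(MODEL_BASE_URL).modelURL);
console.log(runTrigger("thumbs_up", 0.95));
```

Keeping the label-to-action table separate from the model code means you can retrain or swap the model without touching the interface behavior, as long as the class names stay the same.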

Resources for Teachable Machine

- Sample JavaScript code on GitHub

- Intro to Teachable Machine for Image Classification - video on The Coding Train

- Tutorial on making a “Snake game” using gesture recognition - video on The Coding Train

Final Project: Speculative Interfaces

requirements

“Where typical design takes a look at small issues, speculative design broadens the scope and tries to tackle the biggest issues in society.” –Anthony Dunne and Fiona Raby, Speculative Everything: Design, Fiction, and Social Dreaming

Rather than look just at issues of today, speculative design thinking asks “How can we address future challenges with design?”

Propose a speculative user interface for an application. Over the course of the project you will propose a concept and design brief, create prototypes, test them, and document and present your work.

Your idea can be practical or fanciful, surprising or challenging. It is an experimental interface, pointing forward to a new future.

Keep in mind the design and prototyping processes we’ve covered throughout the semester. You may have to improvise your own new approach for your speculative interface.

Consider our readings and learning from throughout the semester, including but not limited to: the early history of interface design, interface metaphors of the desktop, ergonomics, graphical interfaces, accessibility, voice control, and speculative thinking.

For next week, turn in a Design Brief including:

- a. concept stated in a short paragraph. How does it work? What is it for?

- b. list of sources - ideas, writing, concepts that are informing your design work (can also include science fiction, games, articles, movies)

- c. image references from these sources aka ‘mood board’ or inspiration

- d. sketches (paper/pen and/or digital)

- e. flowchart of interface - textual and/or visual

- f. list of resources (starter code, library, underlying technology)

References

- This is Not My Beautiful Home - essay by Everest Pipkin

- What is Speculative Design? - video

- Pointing to the future of UI - video

- Her movie - Alien Child - speculative video game - video

- Interfacing with devices through silent speech - video

- Dynamicland Hardware Field trip - video

- Virtual Reality Training for Operators - video